Why AI development is going to get even faster. (Yes, really!)

by Jack Clark

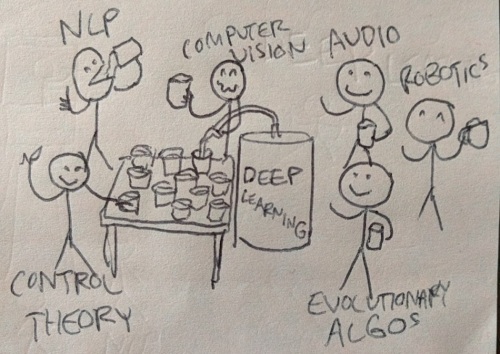

Artist’s depiction of the surprising popularity of deep learning techniques across a variety of disciplines.

The pace of development of artificial intelligence is going to get faster. And not for the typical reasons: more money, interest from megacompanies, faster computers, cheap and abundant data, and so on. Now it’s about to accelerate because other fields are starting to mesh with it, letting insights from one feed into the other, and vice versa.

That’s the gist of a new book by David Beyer, which sees him interview 10 experts about artificial intelligence. It’s free. READ IT. The main takeaway is that neural networks are drawing sustained attention from researchers across the academic spectrum. “Pretty much any researcher who has been to the NIPS Conference [a big AI conference] is beginning to evaluate neural networks for their application,” says Reza Zadeh, a consulting professor at Stanford. That’s going to have a number of weird effects.

(Background: neural networks come in a huge variety of flavors: RNNs! CNNs! LSTMs! GANs! Various other acronyms! But people like them because they basically let you chop out a bunch of hand-written code in favor of feeding inputs and outputs into neural nets and getting computers to come up with the stuff in between. In technical terms, they infer functions. In the late 2000s some clever academics rebranded a subset of neural network techniques as ‘Deep Learning’, which basically means stacking many layers of artificial neurons on top of one another, forming a sort of computationally-brilliant lasagne. When I say ‘machine learning’ in this blogpost, I’m referring to some kind of neural network technique.)
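To make “they infer functions” a bit more concrete, here is a minimal sketch in plain Python (standard library only) of a tiny neural network with one hidden layer. It is only ever shown input/output pairs for y = x**2 and nudges its weights by gradient descent until its outputs roughly match. The function name `train_tiny_net`, the layer size, the learning rate and the training data are all illustrative assumptions, not anything from the book; real deep learning uses dedicated libraries, many more layers and far more data.

```python
import math
import random


def train_tiny_net(samples, hidden=8, lr=0.05, epochs=2000, seed=0):
    """Hypothetical helper: infer a function from (input, output) pairs
    with a one-hidden-layer tanh network trained by gradient descent.

    Returns predict(x) plus the mean squared error before and after training.
    """
    rng = random.Random(seed)
    # Random starting weights break the symmetry between hidden units.
    w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b2 = 0.0
    n = len(samples)

    def forward(x):
        # Hidden layer: tanh(w1 * x + b1); output: weighted sum of hidden units.
        h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
        return h, sum(w2[j] * h[j] for j in range(hidden)) + b2

    def mse():
        return sum((forward(x)[1] - y) ** 2 for x, y in samples) / n

    initial_loss = mse()
    for _ in range(epochs):
        gw1 = [0.0] * hidden
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        for x, y in samples:
            h, out = forward(x)
            d_out = 2.0 * (out - y) / n  # d(MSE)/d(out) for this sample
            gb2 += d_out
            for j in range(hidden):
                gw2[j] += d_out * h[j]
                # Chain rule back through the tanh of hidden unit j.
                d_pre = d_out * w2[j] * (1.0 - h[j] ** 2)
                gw1[j] += d_pre * x
                gb1[j] += d_pre
        # One full-batch gradient descent step on every weight and bias.
        for j in range(hidden):
            w1[j] -= lr * gw1[j]
            b1[j] -= lr * gb1[j]
            w2[j] -= lr * gw2[j]
        b2 -= lr * gb2

    def predict(x):
        return forward(x)[1]

    return predict, initial_loss, mse()


# Illustrative data: the network is never told the rule, only the examples.
data = [(x / 10, (x / 10) ** 2) for x in range(-10, 11)]
predict, before, after = train_tiny_net(data)
print(f"error before training: {before:.4f}, after: {after:.4f}")
print(f"predict(0.5) = {predict(0.5):.3f} (true value 0.25)")
```

Nobody writes x**2 into the network itself; it approximates the rule from the examples alone, and how closely it fits depends on the made-up settings above.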

Robotics has just started to get into neural networks, and this has already sped up development. This year, Google demonstrated a system that teaches robotic arms how to pick up objects of many different sizes and shapes. That work was driven by research conducted last year at Pieter Abbeel’s lab at Berkeley, which saw scientists combine reinforcement learning and deep learning with robotics to create machines that could learn faster. Robots are also getting better eyes, thanks to deep learning. “Armed with the latest deep learning packages, we can begin to recognize objects in previously impossible ways,” says Daniela Rus, a professor in CSAIL at MIT who works on self-driving cars.

More distant communities have already adapted the technology to their own needs. Brendan Frey runs a company called Deep Genomics, which uses machine learning to analyze the genome. Part of the motivation is that humans are “very bad” at interpreting the genome, he says. That’s because we spent hundreds of thousands of years evolving finely tuned pattern detectors for things we saw and heard, like tigers. Because we never had to hunt the genome, or listen for its fearsome sounds, we didn’t develop very good built-in senses for analyzing it. Modern machine learning approaches give us a way to get computers to analyze this type of mind-bending data for us. “We must turn to truly superhuman artificial intelligence to overcome our limitations,” he says.

Others are using their own expertise to improve machine learning. Risto Miikkulainen is an evolutionary computing expert who is trying to figure out how to evolve more efficient neural networks, and to develop systems that can transfer insights from one neural network into another, similar to how reading books lets us extract some data from a separate object (the text) and port it into our own grey matter. Benjamin Recht, a professor at UC Berkeley, has spent years studying control theory, the technology behind autonomous capabilities in modern aircraft and machines. He thinks that fusing control theory and neural networks “might enable safe autonomous vehicles that can navigate complex terrains. Or could assist us in diagnostics and treatments in health care.”

One of the reasons why so many academics from so many different disciplines are getting involved is that deep learning, though complex, is surprisingly adaptable. “Everybody who tries something seems to get things to work beyond what they expected,” says Pieter Abbeel. “Usually it’s the other way around.” Oriol Vinyals, who came up with some of the technology that sits inside Google Inbox’s ‘Smart Reply’ feature, developed a neural network-based algorithm to plot the shortest routes between various points on a map. “In a rather magical moment, we realized it worked,” he says. This generality not only encourages more experimentation but also speeds up the development loop.

(One challenge: though neural networks generalize very well, we still lack a decent theory to describe them, so much of the field proceeds by intuition. This is both cool and extremely bad. “It’s amazing to me that these very vague, intuitive arguments turned out to correspond to what is actually happening,” says Ilya Sutskever, research director at OpenAI, of the move to create ever-deeper neural network architectures. Work needs to be done here. “Theory often follows experiment in machine learning,” says Yoshua Bengio, one of the pioneers of deep learning. Modern AI researchers are like people trying to invent flying machines without the formulas of aerodynamics, says Yann LeCun, Facebook’s head of AI.)

The trillion-dollar (yes, really) unknown in AI is how we get to unsupervised learning: computers that can learn about the world and carry out actions without getting explicit rewards or signals. “How do you even think about unsupervised learning?” wonders Sutskever. One potential area of research is generative models, he says. OpenAI just hired Alec Radford, who did some great work on GANs. Others are looking at this as well, including Ruslan Salakhutdinov at the University of Toronto. Yoshua Bengio thinks it’s important to develop generative techniques, letting computers ‘dream’ and therefore reason about the world. “Our machines already dream, but in a blurry way,” he says. “They’re not yet crisp and content-rich like human dreams and imagination, a facility we use in daily life to imagine those things which we haven’t actually lived.”
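The core idea of a generative model is easiest to see in its most stripped-down form. The plain-Python sketch below is a deliberately tiny stand-in, not a GAN or anything Sutskever or Bengio describe: it “learns” from unlabeled numbers by estimating their mean and spread (no labels, no rewards), then “dreams” up new samples that resemble what it saw. The function names `fit_gaussian` and `dream` and the data are hypothetical, chosen only for illustration.

```python
import random
import statistics


def fit_gaussian(samples):
    """Hypothetical helper: learn from unlabeled data by estimating the
    maximum-likelihood mean and standard deviation of a Gaussian."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples, mu)  # population std = MLE estimate
    return mu, sigma


def dream(mu, sigma, n, seed=None):
    """Hypothetical helper: generate n new, never-observed samples
    from the fitted model."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Illustrative unlabeled observations; the model is never told how they were made.
world = random.Random(0)
observed = [world.gauss(5.0, 2.0) for _ in range(10_000)]

mu, sigma = fit_gaussian(observed)
dreams = dream(mu, sigma, 1_000, seed=1)
print(f"learned mean={mu:.2f}, std={sigma:.2f}")
print("first few dreams:", [round(d, 2) for d in dreams[:5]])
```

Real generative models learn far richer distributions (images, sounds, text), which is exactly why their “dreams” are still blurry; this toy only captures a single bell curve.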

The Deep Learning tsunami, tsunami-ing.

My personal intuition is that deep learning is going to make its way into an ever-expanding number of domains. Given sufficiently large datasets, powerful computers, and the interest of subject-area experts, the Deep Learning tsunami (see picture) looks set to wash over an ever-larger number of disciplines. Buy a swimsuit! (And read the book!)

When you write about unsupervised learning, I get the feeling that you’re referring to something other than straightforward classification. Can you expand on that?

Hi there. Unsupervised learning is going to be key to doing classification in the real world, as it will let AIs integrate new entities into existing models. It will also play a huge role in the development of multi-step inference systems, like NLP engines which are connected to actuators. A hypothetical example which I think is probably being worked on right now is how you get a big text-processing engine to proactively use the right APIs to pull data in and out of other stores in response to queries. That will require a degree of improvisation on the part of the computer system, and will likely involve a combination of ‘classic’ AI techniques, like deep learning & reinforcement learning, and then unsupervised learning to make it less brittle.

But as people in the book note, we don’t really have good unsupervised learning approaches yet, so it’s quite difficult to anticipate all the ways it will be used. There’s just a broad intuition among experts that it is going to be tremendously important.

Every generation of AI workers has defined AI in terms of the current new theory. This might lead us to ignore well-established solutions. For example, NLP is more than capable of doing translation and semantic parsing, yet this capability seems to be ignored by those pursuing a deep learning solution to these problems. I consider progress in NLP to be critical to AGI.

The link to the book appears to be broken…

Odd. It appears to be working for me. What error are you getting?

Hmm, maybe I’m blind, but for me it just opens the main website page http://www.oreilly.com and nothing more…

Reblogged this on Jingchu and commented:

This post resonates with my work. It’s amazing to see how effective DQN can be in network control tasks.

Having coded AI since 1993, I am preparing to release a concept-based AI in Forth for amateur roboticists to develop further.

Reblogged this on Vigneshwer Dhinakaran and commented:

Good insights into the latest happenings in the deep learning field.
